Introduction

The field of Retrieval-Augmented Generation (RAG) is evolving rapidly. It addresses a core limitation of traditional large language models, whose knowledge is fixed at training time, by integrating external information retrieved at query time into the generation process. This article traces how RAG systems developed, from their origins to the core architectural frameworks that define them. It then covers the advanced techniques shaping modern RAG, the challenges that persist, and the research directions likely to matter next, giving developers a practical map of this transformative technology.

Table of Contents

Chapter 1: Historical Development of Retrieval-Augmented Generation (RAG) Systems

- Foundations and Initial Advancements in RAG Systems

- Mitigating Hallucination: Navigating the Early Challenges in RAG Systems

- Innovative Synergies: Technical Breakthroughs and Contextual Integrations in RAG Systems

- Transforming Industries: How RAG’s Evolution Shapes Real-World Applications

- Future Perspectives: Navigating the Trajectory of RAG Evolution

Chapter 2: Core Architectural Frameworks in the Evolution of Retrieval-Augmented Generation (RAG)

- Tracing the Foundations and Transformations of RAG Core Architectures

- Integrative Structural Components in Advanced RAG Architectures

- Leveraging Core Architectural Frameworks in RAG for Enhanced Applications and Impacts

- Exploring Open-Source Frameworks: The Backbone of Modern RAG Systems

- Pioneering Future Paths: Next-Gen Architectural Advancements in RAG

Chapter 3: Advanced Techniques in Modern Retrieval-Augmented Generation (RAG)

- Harnessing Multi-Query Generation and Rank Fusion in RAG Systems

- Agentic Reasoning: Revolutionizing Multi-Step Retrieval in Modern RAG

- The Synergy of Multimodal Knowledge Structures in Modern RAG Techniques

- Optimizing Pipelines for Robust Retrieval-Augmented Generation Performance

- Self-Optimizing Systems: The Engine Behind Real-Time Knowledge Synthesis

Chapter 4: Challenges in the Evolution of Retrieval-Augmented Generation (RAG)

- Navigating the Maze of Data Quality in RAG Evolution

- Navigating Latency and Performance Overheads in RAG Systems

- Navigating Scalability Complexities in Retrieval-Augmented Generation Systems

- Navigating Security and Compliance Challenges in RAG Systems

- Unraveling Complexity: Debugging Opaqueness in RAG Systems

Chapter 5: Future Directions for Retrieval-Augmented Generation (RAG) Research

- Dynamic Knowledge Integration and Continuous Updates in RAG Systems

- Enhanced Retrieval Accuracy and Contextual Depth in the Future of RAG Research

- Elevating Trustworthiness and Explainability in Future RAG Systems

- The Next Frontier of Multimodal Retrieval-Augmented Generation

- Infrastructure and Performance Evolution in RAG Scalability

Chapter 1: Historical Development of Retrieval-Augmented Generation (RAG) Systems

1. Foundations and Initial Advancements in RAG Systems

Retrieval-Augmented Generation (RAG) systems emerged to counteract limitations of large language models (LLMs), such as hallucinations and knowledge that is frozen at training time. By retrieving external data at query time, RAG moved beyond static architectures toward hybrid models that consult external knowledge dynamically. Early work focused on refining the interplay between retrieval and generative components, using vector databases for fast document lookup and semantic search to filter for relevant passages. This approach let RAG systems support multi-hop reasoning and produce more accurate outputs without costly retraining. Initial applications showed promise in high-stakes fields such as medical diagnostics.

For more on RAG’s principles, see KongHQ on RAG’s Principles and Applications.

2. Mitigating Hallucination: Navigating the Early Challenges in RAG Systems

The evolution of Retrieval-Augmented Generation (RAG) systems was driven largely by the need to mitigate hallucination: models generating fluent but false information. Hallucination was a major obstacle for early AI applications, especially in areas demanding high factual accuracy. RAG architectures emerged as a compelling answer, injecting verified external data into the generation process to ground responses. Because retrieval is combined with generation, outputs no longer depend solely on what the model memorized during training, with all its biases and gaps. Over time, RAG refined these mechanisms, improving the reliability of AI outputs across diverse fields, as detailed here.

3. Innovative Synergies: Technical Breakthroughs and Contextual Integrations in RAG Systems

The evolution of Retrieval-Augmented Generation (RAG) systems shows significant technical progress alongside practical integration. Early models struggled with accuracy and coherence, which prompted the adoption of vector embeddings during indexing. Embedding-based indexing helped shift systems from relying on static, built-in knowledge to producing outputs grounded in retrieved data. Later, Modular RAG introduced a more adaptable architecture in which components can be swapped or recombined, improving multi-hop reasoning and the combination of structured logic with unstructured data. This flexibility broadens RAG's contextual applications and paves the way for diverse industry implementations, as detailed in a guide on AI Automation Pro.

4. Transforming Industries: How RAG’s Evolution Shapes Real-World Applications

The maturation of Retrieval-Augmented Generation (RAG) systems has reshaped work in many sectors by letting AI draw on current data rather than only on what it learned during training. In customer service, RAG lets assistants pull accurate, context-specific answers from external knowledge bases, improving customer interactions. In content creation, it supports materials that are better informed and backed by sources. Educational tools use RAG's ability to synthesize data to build detailed, context-rich learning experiences. Together, these applications underscore RAG's role in bridging the gap between static training data and dynamic, real-world use.

5. Future Perspectives: Navigating the Trajectory of RAG Evolution

As RAG systems advance, they promise significant changes in high-stakes fields such as healthcare and finance. The trend is toward domain-specialized solutions whose traceable data sourcing supports compliance and precision. By 2025, autonomous AI agents are expected to transform real-time data retrieval, while multi-source data fusion should further refine outputs. Together, these trends mark a shift toward sophisticated real-time applications and ease adoption for industries under strict regulatory scrutiny (source).

Chapter 2: Core Architectural Frameworks in the Evolution of Retrieval-Augmented Generation (RAG)

1. Tracing the Foundations and Transformations of RAG Core Architectures

Retrieval-Augmented Generation (RAG) represents a major step forward in AI, combining real-time data retrieval with the capabilities of large language models to reduce “hallucinations” and improve factuality. At the center of its architecture is the interaction between a retriever, which finds relevant external data, and a generator, which conditions its output on that data. Over time, RAG has improved its retrieval to draw on diverse, up-to-date sources, making it valuable in precision-critical fields such as legal and medical services. For a deeper dive into the science behind RAG, visit this comprehensive guide.

2. Integrative Structural Components in Advanced RAG Architectures

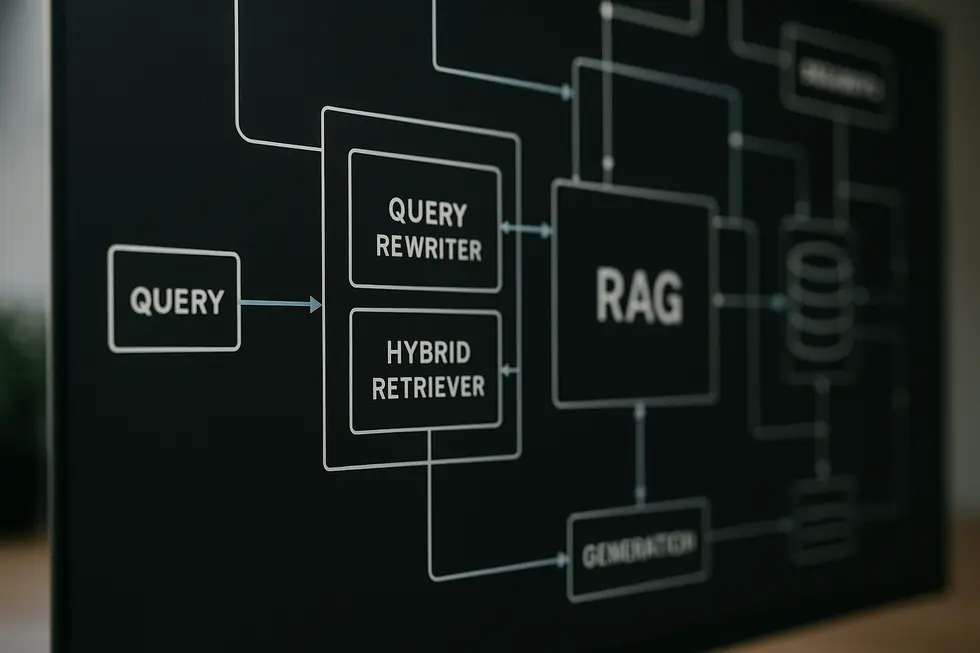

A Retrieval-Augmented Generation architecture combines two pipelines, Data Ingestion and Retrieval, with a Generator Component and Supporting Infrastructure. Ingestion turns raw data into chunks and embeddings indexed for context retrieval. Retrieval refines the user's query and runs a semantic search over that index, supplying relevant context for augmented generation. Advanced designs improve precision and consistency by pairing dense vector search with keyword search. Together these components form the backbone of evolving RAG systems, balancing structured logic against dynamic data handling. For more on implementing these frameworks, explore the RAG Blueprint Framework.
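The two pipelines described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the RAG Blueprint Framework's design: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, an in-memory list stands in for the vector database, and `MiniRag`, `ingest`, `retrieve`, and `build_prompt` are illustrative names. The generator itself is omitted; the sketch stops at the prompt that would be sent to it.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    # Counter returns 0 for missing terms, so only shared terms contribute.
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class MiniRag:
    def __init__(self):
        self.store = []  # list of (chunk_text, vector); stands in for a vector database

    # Ingestion pipeline: raw text -> fixed-size word chunks -> vectors -> store
    def ingest(self, document, chunk_size=50):
        words = document.split()
        for i in range(0, len(words), chunk_size):
            chunk = " ".join(words[i:i + chunk_size])
            self.store.append((chunk, embed(chunk)))

    # Retrieval pipeline: query -> vector -> top-k most similar chunks
    def retrieve(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.store, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

    # Generator input: retrieved chunks are placed into the prompt as context
    def build_prompt(self, query, k=2):
        context = "\n".join(f"- {c}" for c in self.retrieve(query, k))
        return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
```

In a real system `embed` would call an embedding model and `retrieve` would query an approximate nearest neighbor index; the split between the offline ingestion path and the per-query retrieval path is the part that carries over.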

3. Leveraging Core Architectural Frameworks in RAG for Enhanced Applications and Impacts

At the heart of Retrieval-Augmented Generation (RAG) lies an architecture that grounds generative language models by connecting them to external data repositories. This connection supports applications across industries, from customer service chatbots to sophisticated knowledge management systems. By grounding answers in retrieved sources, RAG reduces misinformation and improves factual accuracy, which is crucial in sectors like healthcare and finance. Learn more about RAG frameworks on Microsoft.

4. Exploring Open-Source Frameworks: The Backbone of Modern RAG Systems

Open-source frameworks form the scaffolding of modern RAG systems, offering diverse, adaptable solutions. RAG Blueprint takes a modular development approach with built-in monitoring and flexible retrieval pipelines, supporting analytics-driven insights. Shraga, by contrast, focuses on agility and minimalism, enabling rapid, cost-effective deployments. Vectara's Open RAG Eval provides evaluation tooling that helps teams measure and refine RAG components. LlamaIndex and LangChain strengthen indexing and orchestration, though they differ in how complex they are to run in production. As these frameworks mature, they underpin agentic RAG solutions, marking a pivotal shift in RAG's precision and efficiency.

5. Pioneering Future Paths: Next-Gen Architectural Advancements in RAG

The landscape of Retrieval-Augmented Generation (RAG) is poised for significant transformation as it embraces next-generation architectures. Central to this evolution is the integration of knowledge graphs and ontologies, which promise stronger reasoning by explicitly structuring the relationships in complex data. Collaboration between specialized AI agents with different domain expertise is expected to reshape workflows by automating intricate tasks. Together, these developments point toward more sophisticated and responsive RAG frameworks. For deeper insights, explore next-generation RAG architectures.

Chapter 3: Advanced Techniques in Modern Retrieval-Augmented Generation (RAG)

1. Harnessing Multi-Query Generation and Rank Fusion in RAG Systems

Combining Multi-Query Generation with Rank Fusion improves retrieval accuracy in Retrieval-Augmented Generation (RAG) systems. By generating several differently phrased queries from a single input, a RAG system approaches the question from multiple angles, so relevant documents missed by one phrasing can still be found. Rank Fusion then merges the resulting ranked lists into a single ranking; Reciprocal Rank Fusion, for example, rewards documents that rank well across many lists. This two-part approach makes retrieval more robust by offsetting the weaknesses of any single query or retrieval method, and it yields broader context for generation. For further exploration of these methods, Foundations of Retrieval Augmented Generation (RAG) Systems provides foundational context.
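A minimal sketch of the two steps follows. Reciprocal Rank Fusion scores each document as the sum of 1 / (k + rank) over every ranked list it appears in, with 1-based ranks; the constant k = 60 is the value commonly used in the literature and is an assumption here. `rewrite` and `search` are hypothetical callables standing in for an LLM query rewriter and a retriever.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse ranked lists of document IDs: score(d) = sum over lists of 1 / (k + rank)."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


def multi_query_retrieve(question, rewrite, search, top_k=5):
    # rewrite(question) -> list[str]: hypothetical LLM call producing query variants
    # search(query) -> list[str]: hypothetical retriever returning ranked doc IDs
    queries = [question] + rewrite(question)
    rankings = [search(q) for q in queries]
    return [doc_id for doc_id, _ in reciprocal_rank_fusion(rankings)[:top_k]]


# "b" is never last and ranks first in two of three lists, so consensus puts it on top.
fused = reciprocal_rank_fusion([["a", "b", "c"], ["b", "a", "d"], ["b", "c", "a"]])
print([doc_id for doc_id, _ in fused])  # → ['b', 'a', 'c', 'd']
```

RRF uses only rank positions, not raw scores, which is why it can merge results from retrievers whose scores live on incompatible scales.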

2. Agentic Reasoning: Revolutionizing Multi-Step Retrieval in Modern RAG

Agentic RAG systems mark a paradigm shift: they use autonomous agents to handle complex queries and synthesize information from multiple sources. These systems run multi-step reflection loops, retrieving, assessing, and retrieving again, to refine responses for coherence and relevance. Agents take on specialized tasks such as query optimization and data validation, making decisions in real time. The result is higher response quality and precision, especially in domains demanding high accuracy. For a deeper dive, see Agentic RAG explained.
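The reflection loop described above can be sketched as plain control flow. This is a minimal, framework-free illustration under stated assumptions: `plan_query`, `retrieve`, `is_sufficient`, and `generate` are hypothetical callables standing in for LLM-driven agent steps, and `max_steps` caps the loop so it always terminates.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    question: str
    evidence: list = field(default_factory=list)
    queries_tried: list = field(default_factory=list)


def agentic_answer(question, plan_query, retrieve, is_sufficient, generate, max_steps=3):
    """Minimal retrieve -> reflect -> retrieve-again loop.

    plan_query(state) -> str | None : hypothetical agent step proposing the next query (None = stop)
    retrieve(query) -> list[str]    : hypothetical retriever returning passages
    is_sufficient(state) -> bool    : hypothetical reflection / validation step
    generate(state) -> str          : hypothetical LLM call producing the final answer
    """
    state = AgentState(question)
    for _ in range(max_steps):
        query = plan_query(state)
        if query is None or query in state.queries_tried:
            break  # the agent has nothing new to ask
        state.queries_tried.append(query)
        for passage in retrieve(query):
            if passage not in state.evidence:  # simple de-duplication across steps
                state.evidence.append(passage)
        if is_sufficient(state):
            break  # reflection judged the evidence adequate
    return generate(state), state
```

Returning the state alongside the answer keeps the queries and evidence visible, which helps when validating what the agent actually relied on.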

3. The Synergy of Multimodal Knowledge Structures in Modern RAG Techniques

Multimodal Retrieval-Augmented Generation (RAG) combines text, images, and structured data to deepen an AI system's contextual understanding. Hierarchical Multi-Agent Multimodal RAG (HM-RAG) uses a layered agent model that integrates diverse data types to refine answers, and it reports superior results in zero-shot settings. In Visual Question Answering (VQA), multimodal RAG draws on both visual cues and textual retrieval to provide richer, more accurate answers. Explore HM-RAG's detailed framework for more insights.
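HM-RAG's own agent hierarchy is more elaborate than anything shown here. As a simpler illustration of combining modalities, the sketch below uses late fusion: each modality (for example a text index and an image index) is searched separately, scores are min-max normalized per modality so they become comparable, and a weighted sum ranks the merged results. The function names, weights, and data are hypothetical.

```python
def normalize(hits):
    """Min-max normalize one modality's (doc_id, score) hits to the range [0, 1]."""
    if not hits:
        return {}
    lo = min(score for _, score in hits)
    hi = max(score for _, score in hits)
    if hi == lo:
        return {doc: 1.0 for doc, _ in hits}  # all equal: treat every hit as a top match
    return {doc: (score - lo) / (hi - lo) for doc, score in hits}


def fuse_modalities(results_by_modality, weights, top_k=5):
    """Late fusion: weighted sum of normalized per-modality scores."""
    fused = {}
    for modality, hits in results_by_modality.items():
        w = weights.get(modality, 1.0)
        for doc, score in normalize(hits).items():
            fused[doc] = fused.get(doc, 0.0) + w * score
    # Highest fused score first; ties broken by doc ID.
    return sorted(fused, key=lambda d: (-fused[d], d))[:top_k]
```

Documents found by several modalities accumulate score, so an item that matches both the text and the image query tends to rise to the top.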

4. Optimizing Pipelines for Robust Retrieval-Augmented Generation Performance

In advanced Retrieval-Augmented Generation (RAG), pipeline optimization is essential for production-grade performance. Query expansion and re-ranking improve retrieval quality, while caching cuts repeated work and latency. Pre-retrieval optimizations such as careful data chunking further improve the accuracy and coherence of outputs. Layering these optimizations helps RAG systems deliver the high-quality, context-rich responses that real-world applications require. For more on enhancing RAG systems, visit key strategies for enhancing RAG effectiveness.
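Two of these optimizations fit in a short sketch: word-window chunking with overlap (a pre-retrieval step) and an in-process cache in front of the retriever. The window size, overlap, and cache size are illustrative defaults, not recommendations, and `search` is a hypothetical retriever callable.

```python
from functools import lru_cache


def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into overlapping word windows.

    The overlap keeps content that straddles a boundary retrievable from either chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def make_cached_search(search, maxsize=1024):
    """Wrap a retriever so repeated identical queries are served from an LRU cache."""
    @lru_cache(maxsize=maxsize)
    def cached(query):
        # Tuples are immutable, so callers cannot corrupt a cached result.
        return tuple(search(query))
    return cached
```

An exact-match cache like this only helps for repeated queries; it also needs invalidation (for example `cached.cache_clear()`) whenever the underlying index changes.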

5. Self-Optimizing Systems: The Engine Behind Real-Time Knowledge Synthesis

As Retrieval-Augmented Generation (RAG) evolves, autonomous systems have become central to real-time knowledge synthesis. These self-optimizing systems adjust their retrieval pipelines dynamically, using reinforcement learning to fine-tune search queries and document selection. Hybrid retrieval engines combine dense vector search with keyword matching to improve the accuracy of the documents returned. Iterative query rewriting, guided by LLM feedback, sharpens semantic understanding, while validating the freshness of retrieved data at query time keeps results relevant and reliable. Together, these components drive a shift toward more efficient, accurate, and intelligent retrieval. For more insights, visit advancements in autonomous RAG systems; more foundational information on RAG can be found at Foundations of RAG Systems.
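Hybrid scoring with a freshness check can be sketched as below. Assumptions to note: `keyword_score` is a crude term-overlap stand-in for a real lexical scorer such as BM25, the blend weight `alpha` and the 30-day freshness window are arbitrary, and the document fields (`id`, `text`, `vec`, `updated`) are hypothetical. The reinforcement-learning tuning and LLM-guided query rewriting mentioned above are not shown.

```python
import math
from datetime import datetime, timedelta, timezone


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def keyword_score(query, text):
    """Fraction of query terms present in the text (a crude stand-in for BM25)."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_search(query, query_vec, docs, alpha=0.5, max_age_days=30, now=None, top_k=3):
    """Blend dense and keyword scores after dropping stale documents.

    docs: list of dicts with hypothetical keys 'id', 'text', 'vec', 'updated' (aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    # Freshness validation: ignore documents older than the allowed window.
    fresh = [d for d in docs if now - d["updated"] <= timedelta(days=max_age_days)]
    scored = [
        (alpha * cosine(query_vec, d["vec"]) + (1 - alpha) * keyword_score(query, d["text"]), d["id"])
        for d in fresh
    ]
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [doc_id for _, doc_id in scored[:top_k]]
```

Setting `alpha` closer to 1 favors semantic similarity; closer to 0 favors exact term matches such as product codes or names.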

Chapter 4: Challenges in the Evolution of Retrieval-Augmented Generation (RAG)

1. Navigating the Maze of Data Quality in RAG Evolution

In Retrieval-Augmented Generation (RAG), data quality is a formidable challenge: output fidelity is directly tied to the reliability of the source data. Bias, incompleteness, and outdated information can skew results and undermine a system's credibility. Regular updates, data validation, and drawing on diverse sources are key countermeasures, and automated evaluation tools can streamline quality assurance. Explore more insights on RAG challenges for a fuller view of the evolving strategies.
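Data validation before indexing can start with simple, automatable checks. The sketch below rejects documents that are too short, too old, or duplicates after whitespace and case normalization; the thresholds and the document fields (`id`, `text`, `updated`) are hypothetical, and bias or completeness checks would need domain-specific logic beyond this.

```python
import hashlib
from datetime import datetime, timedelta, timezone


def validate_corpus(docs, max_age_days=365, min_chars=20, now=None):
    """Return (kept, rejected), where rejected maps doc id -> reason.

    docs: list of dicts with hypothetical keys 'id', 'text', 'updated' (timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    seen_hashes = set()
    kept, rejected = [], {}
    for doc in docs:
        text = doc["text"].strip()
        # Normalize case and whitespace so trivial variants hash identically.
        digest = hashlib.sha256(" ".join(text.lower().split()).encode()).hexdigest()
        if len(text) < min_chars:
            rejected[doc["id"]] = "too short"
        elif now - doc["updated"] > timedelta(days=max_age_days):
            rejected[doc["id"]] = "stale"
        elif digest in seen_hashes:
            rejected[doc["id"]] = "duplicate"
        else:
            seen_hashes.add(digest)
            kept.append(doc)
    return kept, rejected
```

Keeping the rejection reasons, rather than silently dropping documents, makes it possible to report on corpus quality over time.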

2. Navigating Latency and Performance Overheads in RAG Systems

Retrieval-Augmented Generation (RAG) introduces significant latency and performance challenges, primarily because of its two-stage architecture. The retrieval stage must embed the query and search a vector database quickly, and its speed depends on database size and indexing efficiency; it degrades as databases grow or indexing strategies become outdated. The generation stage adds further latency while the large language model (LLM) processes the augmented prompt. Infrastructure choices, such as Google Axion processors built on Arm Neoverse V2 cores, can substantially influence latency, highlighting hardware's critical role.
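Before optimizing, it helps to know which stage dominates. The sketch below times each stage of a request separately using only the standard library; `embed`, `search`, and `generate` are hypothetical stand-ins for the embedding model, the vector database, and the LLM, and only the timing scaffold is the point.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(stage, timings):
    """Record the wall-clock duration of a block under timings[stage], in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start


def answer_with_timings(question, embed, search, generate):
    # embed, search and generate are hypothetical callables for the three stages.
    timings = {}
    with timed("embed", timings):
        vec = embed(question)
    with timed("retrieve", timings):
        docs = search(vec)
    with timed("generate", timings):
        answer = generate(question, docs)
    return answer, timings
```

Logging these per-stage numbers over many requests shows whether index tuning or generation-side changes will pay off more.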

Optimization strategies such as refining embeddings, building approximate indexes with hierarchical navigable small world (HNSW) graphs, and caching aim to ease these issues. Performance overheads also stem from error propagation, where a poor retrieval result degrades every later stage, so robust monitoring of metrics such as recall and perceived latency is essential. For deeper insights, explore this detailed overview of RAG optimization tools.
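HNSW itself is normally provided by the vector database or an ANN library, so it is not reimplemented here. The sketch below covers the monitoring side: recall@k over a small labeled evaluation set, i.e. the share of known-relevant documents that appear in the top k results. `search` and the evaluation data are hypothetical.

```python
def recall_at_k(retrieved, relevant, k):
    """Share of the relevant documents that appear in the top-k retrieved list."""
    relevant = set(relevant)
    if not relevant:
        raise ValueError("recall is undefined without relevant documents")
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_recall_at_k(eval_set, search, k=5):
    """eval_set: list of (query, relevant_ids); search: hypothetical retriever returning ranked ids."""
    scores = [recall_at_k(search(query), rel, k) for query, rel in eval_set]
    return sum(scores) / len(scores)
```

Tracking this number after each index change (for example a different HNSW configuration) shows whether a latency gain came at the cost of missed documents.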

3. Navigating Scalability Complexities in Retrieval-Augmented Generation Systems

Scaling RAG systems demands robust infrastructure, because the two-stage architecture requires real-time data integration and tight synchronization between components. Efficient vector databases and cost-effective storage add to the infrastructure complexity. Keeping data current and accurate becomes harder as systems scale, given the overhead of constant updates. Costs are significant too, since real-time retrieval needs high-throughput infrastructure. Retrieval can also add latency, and security concerns grow with scale, making strict compliance measures essential. These demands point to modular designs, optimized algorithms, and deliberate data-freshness strategies for RAG systems.
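One common way to scale a vector index horizontally is to partition documents across shards, scatter each query to all of them, and then gather and merge the partial results. The toy sketch below does this in a single process with exact dot-product scoring; `ShardedIndex` is an illustrative name, not a specific database's API. Because each shard returns its own top k, the merged top k is exact.

```python
import hashlib
import heapq


class ShardedIndex:
    """Toy scatter-gather index: documents are hash-partitioned across shards,
    each query fans out to every shard, and partial top-k lists are merged."""

    def __init__(self, num_shards):
        self.shards = [dict() for _ in range(num_shards)]

    def _shard_for(self, doc_id):
        # Stable hash: Python's built-in hash() of str is randomized per process.
        h = int(hashlib.md5(doc_id.encode()).hexdigest(), 16)
        return self.shards[h % len(self.shards)]

    def add(self, doc_id, vec):
        self._shard_for(doc_id)[doc_id] = vec

    @staticmethod
    def _dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    def search(self, query_vec, k=3):
        partials = []
        for shard in self.shards:  # in production these calls would run in parallel
            partials.extend(
                heapq.nlargest(k, ((self._dot(query_vec, v), doc_id) for doc_id, v in shard.items()))
            )
        return [doc_id for _, doc_id in heapq.nlargest(k, partials)]
```

The same shape applies when each shard is a separate node running its own ANN index, with the caveat that approximate shards make the merged result approximate too.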

4. Navigating Security and Compliance Challenges in RAG Systems

Retrieval-Augmented Generation (RAG) systems introduce notable security and compliance risks. Data poisoning is a prevalent threat: malicious content inserted into the knowledge base corrupts what the retriever returns, and the errors propagate into generated answers. Information leakage is another critical concern, since aggregating data from diverse sources can expose sensitive information to users who should not see it. Addressing these vulnerabilities requires robust data cleansing, access controls, and safety protocols tailored to RAG frameworks. For further insights, see Chitika's guide on RAG risks.
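Access control in RAG is usually enforced on retrieved chunks before they reach the prompt, because anything placed in the prompt can end up in the answer. The sketch below filters by user group and by a source allowlist (a blunt guard against content from unvetted sources); the `Chunk` fields, group names, and source labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    source: str                # where the content came from (hypothetical label)
    allowed_groups: frozenset  # groups permitted to read the source document


def authorized_context(chunks, user_groups, trusted_sources):
    """Keep only chunks the user may see that also come from a trusted source."""
    user_groups = set(user_groups)
    return [
        c for c in chunks
        if c.source in trusted_sources and c.allowed_groups & user_groups
    ]
```

Filtering after retrieval is simple but can leave fewer than k usable chunks; many vector stores also support metadata filters so the restriction is applied during the search itself.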

5. Unraveling Complexity: Debugging Opaqueness in RAG Systems

The complexity of RAG systems makes them hard to debug, because their components are tightly intertwined. Isolating errors is difficult when a drop in output quality could stem from several stages, such as document chunking, retrieval, or LLM misinterpretation. Slow feedback from pre-processing bottlenecks hinders rapid iteration, and poor document retrieval quality compounds the problem. The lack of standardized debugging tools makes these obstacles worse, underscoring the need for real-time performance monitoring. More on the innovations enhancing RAG systems can be explored here.
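A lightweight way to reduce this opacity is to record what each stage saw and produced for every request, so a bad answer can be traced back to a specific chunk, retrieval result, or prompt. The sketch below is a minimal per-request trace; `RagTrace`, the stage names, and the chunk-ID format are hypothetical, and a production system would typically send such records to a tracing or logging backend.

```python
import json
import time
import uuid


class RagTrace:
    """Collects per-stage inputs and outputs for one RAG request."""

    def __init__(self, question):
        self.record = {"trace_id": str(uuid.uuid4()), "question": question, "stages": []}

    def log(self, stage, **details):
        # Each entry keeps the stage name, a timestamp and arbitrary details.
        self.record["stages"].append({"stage": stage, "t": time.time(), **details})

    def to_json(self):
        return json.dumps(self.record, indent=2)


# Usage: log what retrieval returned and what generation produced.
trace = RagTrace("What is reciprocal rank fusion?")
trace.log("retrieve", chunk_ids=["doc1#3", "doc7#0"], scores=[0.82, 0.64])
trace.log("generate", prompt_chars=1840, answer="RRF merges several ranked lists.")
```

With the retrieved chunk IDs stored next to the answer, a reviewer can check whether the right content was retrieved before blaming the LLM.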

Chapter 5: Future Directions for Retrieval-Augmented Generation (RAG) Research

1. Dynamic Knowledge Integration and Continuous Updates in RAG Systems

Retrieval-Augmented Generation (RAG) systems continue to improve at integrating dynamic knowledge and processing real-time updates. This matters most in sectors like healthcare, where combining LLMs with real-time data retrieval keeps decisions informed by the latest research. Because RAG picks up new information by updating its knowledge base rather than the model's weights, it reduces the need for frequent retraining, making AI applications more cost-effective and scalable. Read more on this transformation.
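Continuous updates usually mean upserting individual documents into the index instead of rebuilding it. The sketch below re-embeds only documents whose version increased and ignores stale or out-of-order updates; `LiveIndex`, the integer version field, and the `embed` callable are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    version: int
    text: str
    vec: list


class LiveIndex:
    """In-memory index that accepts incremental updates instead of full rebuilds."""

    def __init__(self, embed):
        self.embed = embed  # hypothetical embedding function: str -> list[float]
        self.entries = {}

    def upsert(self, doc_id, text, version):
        current = self.entries.get(doc_id)
        if current is not None and current.version >= version:
            return False  # stale or duplicate update: keep the newer entry
        self.entries[doc_id] = Entry(version, text, self.embed(text))  # re-embed only this doc
        return True

    def delete(self, doc_id):
        self.entries.pop(doc_id, None)  # removing a retracted source is a no-op if absent
```

The model itself is untouched; new knowledge becomes retrievable as soon as its entry is upserted.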

2. Enhanced Retrieval Accuracy and Contextual Depth in the Future of RAG Research

As RAG systems improve, retrieval precision and contextual relevance remain paramount. Research focuses on algorithms that reliably identify the information most relevant to the generation step. Hybrid retrieval, which combines dense vectors with keyword search, promises a substantial gain in accuracy. As RAG systems advance, they could transform many fields by making AI outputs more reliable and trustworthy. More insights are available on AryaXai.

3. Elevating Trustworthiness and Explainability in Future RAG Systems

Innovations in trustworthiness and explainability are pivotal to the evolution of RAG research. Dynamic knowledge grounding adjusts to real-time data so that outdated information is filtered out. Multi-source verification improves factual accuracy, while bias mitigation frameworks promote fair content retrieval. Explainability improves through retrieval attribution mapping, which records the provenance of each response, and uncertainty quantification, which reports how confident the system is, building user trust and clarity across complex queries (source).
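Retrieval attribution can start as simply as numbering the passages placed in the prompt, asking the model to cite them, and mapping the citations in its answer back to source IDs. The sketch below shows that bookkeeping; the prompt wording and the `[n]` citation convention are assumptions, and the model is not guaranteed to follow them, which is why citations that point nowhere are flagged.

```python
import re


def build_prompt(question, passages):
    """passages: list of (source_id, text); each passage gets a [n] tag to cite."""
    lines = [f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(passages, start=1)]
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {question}"
    )


def attribution_map(answer, passages):
    """Map each [n] cited in the answer to its source id; list citations with no matching passage."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    mapping = {n: passages[n - 1][0] for n in cited if 1 <= n <= len(passages)}
    invalid = [n for n in cited if n not in mapping]
    return mapping, invalid
```

The mapping gives each response a provenance record; an invalid citation is a cheap signal that the answer may not be grounded.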

4. The Next Frontier of Multimodal Retrieval-Augmented Generation

In the rapidly evolving landscape of Retrieval-Augmented Generation (RAG), expanding multimodal capabilities is a significant future direction. Cross-modal alignment is pivotal: systems such as HM-RAG coordinate text, images, and audio through robust embedding and fusion techniques. Equally important are scalable indexing strategies that deliver enterprise-level performance across diverse data types. As RAG systems advance, domain-specific applications in areas such as healthcare and IoT offer promising, regulated pipelines for targeted innovation in multimodal data processing. These future directions hold the key to unlocking the full potential of multimodal RAG.

5. Infrastructure and Performance Evolution in RAG Scalability

As RAG systems evolve, future scalability will hinge on cloud-native architectures that allow seamless horizontal scaling. Enterprise deployments will benefit from auto-scaling algorithms that match resources to query demand. Caching and approximate nearest neighbor (ANN) algorithms will reduce latency while keeping retrieval quality high across very large datasets. Hybrid retrieval models will further improve precision. Learn more about RAG optimization tools.
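Demand-based auto-scaling is often a target-tracking rule. The sketch below uses the same shape as the Kubernetes Horizontal Pod Autoscaler formula, desired = ceil(current_replicas × current_metric / target_metric), with a tolerance band to avoid flapping. Which metric to track (for example queries per second per replica or retrieval latency), the bounds, and the 10% tolerance are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Target-tracking scaling: desired = ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 retrieval replicas each seeing 150 QPS against a 100 QPS target -> scale to 6.
print(desired_replicas(4, 150, 100))  # → 6
```

The clamp to `min_replicas` and `max_replicas` keeps a burst of traffic from scaling costs without bound.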

Final thoughts

The ongoing evolution of Retrieval-Augmented Generation marks substantial progress toward robust AI systems that make effective use of real-time data. By understanding its history and core frameworks, exploring modern techniques, and addressing pressing challenges, developers can better judge RAG's impact and where it is heading. These insights show RAG's potential to move beyond the limitations of traditional AI and can guide developers in building and advancing these systems.

Ready to elevate your business with cutting-edge automation? Contact AI Automation Pro Agency today and let our expert team guide you to streamlined success with n8n and AI-driven solutions!

About us

AI Automation Pro Agency is a forward-thinking consulting firm specializing in n8n workflow automation and AI-driven solutions. Our team of experts is dedicated to empowering businesses by streamlining processes, reducing operational inefficiencies, and accelerating digital transformation. By leveraging the flexibility of the open-source n8n platform alongside advanced AI technologies, we deliver tailored strategies that drive innovation and unlock new growth opportunities. Whether you’re looking to automate routine tasks or integrate complex systems, AI Automation Pro Agency provides the expert guidance you need to stay ahead in today’s rapidly evolving digital landscape.